A Framework for Web (of Things) Agents

Hypermedea is a framework to develop autonomous agents that are capable of navigating the Web. Autonomous agents are particularly relevant as controllers on the Web of Things, e.g. in building automation systems or for industrial control.

Hypermedea follows the multi-agent oriented programming (MAOP) approach, which provides several abstraction layers, from the embodiment of agents in an environment to their coordination via agent organizations. Hypermedea provides utilities to program agents against a particular environment: the Web.

In MAOP, an agent perceives and acts on its environment via proxy entities called Artifacts. Hypermedea is composed of four artifacts. This tutorial introduces them one by one.

Linked Data Navigation

The first action that all Web agents perform is Web navigation.

Hypermedea focuses on Linked Data navigation: crawled resources have an RDF representation.

The Linked Data artifact exposes an operation to dereference a URI, i.e. a GET operation (get/1), and keeps its RDF representation in a knowledge base.

The artifact also keeps track of visited resources (visited/1) and pending dereferencing operations.

The following code snippet (in the Jason agent programming language) performs a GET operation on a known URI and prints the number of received RDF triples.

    +!run <-
        get("https://territoire.emse.fr/kg/emse/fayol") .

    +visited(URI) <-
        !countTriples .

    +!countTriples <-
        .count(rdf(S, P, O), Count) ;
        .print("found ", Count, " triples.") .

This first program should read as follows:

- once the agent has the intention to run (!run), it calls a GET operation on the entry point to the Fayol Knowledge Graph, an RDF dataset describing the building of Institut Fayol at Mines Saint-Étienne;

- once the agent believes the resource has been visited (visited(URI)), it has the intention of counting triples (!countTriples);

- once the agent has that intention, it executes a sequence of two actions: it first counts the number of RDF triples included in its belief base (rdf(S, P, O)) and then prints that number.

Jason is a Belief-Desire-Intention (BDI) agent programming framework. Beliefs are ground predicates that represent the internal state of the agent. Desires are goals that the agent has in reaction to particular events (either triggered by perception or by internal actions). Intentions are the association of a goal and a plan that the agent believes can be executed and achieves the goal.

A Jason program is a collection of rules Event <- Plan that associate events with possible plans.

Events are the addition or deletion of a belief or a goal.

For example, +visited(URI) is the addition of a belief (triggered by the Linked Data artifact) while +!countTriples is the addition of a goal (triggered by another plan).

A plan is always a sequence of actions (separated by ;).

In the above program, .count(...) and .print(...) are internal actions, provided not by an artifact but by the Jason standard library.

The Linked Data artifact processes GET operations asynchronously: an agent can call several GET operations in parallel and wait for +visited(URI) events to be triggered (or pursue other goals).

As a result, Hypermedea agents can efficiently crawl a given Knowledge Graph, i.e. discover their environment and the possible actions it affords.
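The asynchronous behavior described above can be illustrated outside Jason. The following Python sketch is not Hypermedea code: `get`, `crawl_in_parallel` and the `ex:` URIs are hypothetical names, and a sleep stands in for network latency. It only shows that several requests can be pending at once and that completion events arrive in completion order, not in call order.

```python
import asyncio

async def get(uri, delay):
    """Simulated dereferencing: the sleep stands in for network latency."""
    await asyncio.sleep(delay)
    return uri

async def crawl_in_parallel(requests):
    """Start all requests at once and collect them as they complete."""
    visited = []
    tasks = [asyncio.create_task(get(uri, delay)) for uri, delay in requests]
    for finished in asyncio.as_completed(tasks):
        # each completion plays the role of a +visited(URI) event
        visited.append(await finished)
    return visited

# The slow request is issued first but completes last.
ORDER = asyncio.run(crawl_in_parallel([("ex:slow", 0.05), ("ex:fast", 0.01)]))
```

In Jason, the agent does not block on such requests: it keeps pursuing other intentions until the corresponding events are perceived.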

The following program crawls any Knowledge Graph given its entry point and known vocabularies (see initial intention !crawl(URI)).

    knownVocab("http://www.w3.org/ns/sosa/") .
    knownVocab("http://www.w3.org/ns/ssn/") .
    knownVocab("https://w3id.org/bot#") .
    knownVocab("https://www.w3.org/2019/wot/td#") .
    knownVocab("https://example.org/ontology#") .

    +rdf(S, P, Target)[crawler_source(Anchor)] : crawling <-
        if (knownVocab(Vocab) & .substring(Vocab, P, 0)) {
            +barrier_resource(Anchor, Target)
        } .

    +!crawl(URI) <-
        +crawling ;
        get(URI) ;
        for (knownVocab(Vocab)) {
            get(Vocab)
        } .

    // event generated by the LinkedDataArtifact
    +visited(URI) : crawling <-
        for (barrier_resource(Anchor, URI)) {
            if (not visited(URI) | toVisit(URI)) {
                get(URI)
            }
        } ;
        if (crawler_status(false)) {
            -crawling
        } .

In the above program, all discovered RDF triples are considered as hyperlinks pointing to other Web resources. For a given triple, the agent navigates from the anchor resource to the target resource (object) only if the predicate URI matches the namespace of some known vocabulary. This program may not terminate; other Jason rules could be added, e.g. to restrict link traversal to a finite number of hops.
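The traversal rule, together with a hop limit that guarantees termination, can be sketched outside Jason. The Python code below is an illustration only, not Hypermedea code: the `GRAPH` dictionary stands in for dereferenced RDF documents, and `crawl`, `is_followed`, `max_hops` and the `ex:` identifiers are hypothetical names.

```python
from collections import deque

KNOWN_VOCABS = (
    "http://www.w3.org/ns/sosa/",
    "https://w3id.org/bot#",
)

# Toy stand-in for the Web: URI -> triples found in its representation.
GRAPH = {
    "ex:building": [
        ("ex:building", "https://w3id.org/bot#hasStorey", "ex:floor0"),
        ("ex:building", "http://xmlns.com/foaf/0.1/homepage", "ex:home"),
    ],
    "ex:floor0": [("ex:floor0", "https://w3id.org/bot#hasSpace", "ex:room012")],
    "ex:room012": [("ex:room012", "https://w3id.org/bot#containsElement", "ex:dx10")],
}

def is_followed(predicate):
    """A link is followed only if its predicate belongs to a known vocabulary."""
    return predicate.startswith(KNOWN_VOCABS)

def crawl(entry_point, max_hops):
    """Breadth-first traversal that stops expanding links after max_hops."""
    visited = set()
    queue = deque([(entry_point, 0)])
    while queue:
        uri, hops = queue.popleft()
        if uri in visited:
            continue
        visited.add(uri)
        if hops == max_hops:
            continue  # hop limit reached: do not follow outgoing links
        for _, predicate, target in GRAPH.get(uri, []):
            if is_followed(predicate) and target not in visited:
                queue.append((target, hops + 1))
    return visited
```

With `max_hops` set to 2, the sketch stops at `ex:room012`; the FOAF link is never followed because its namespace is not in `KNOWN_VOCABS`.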

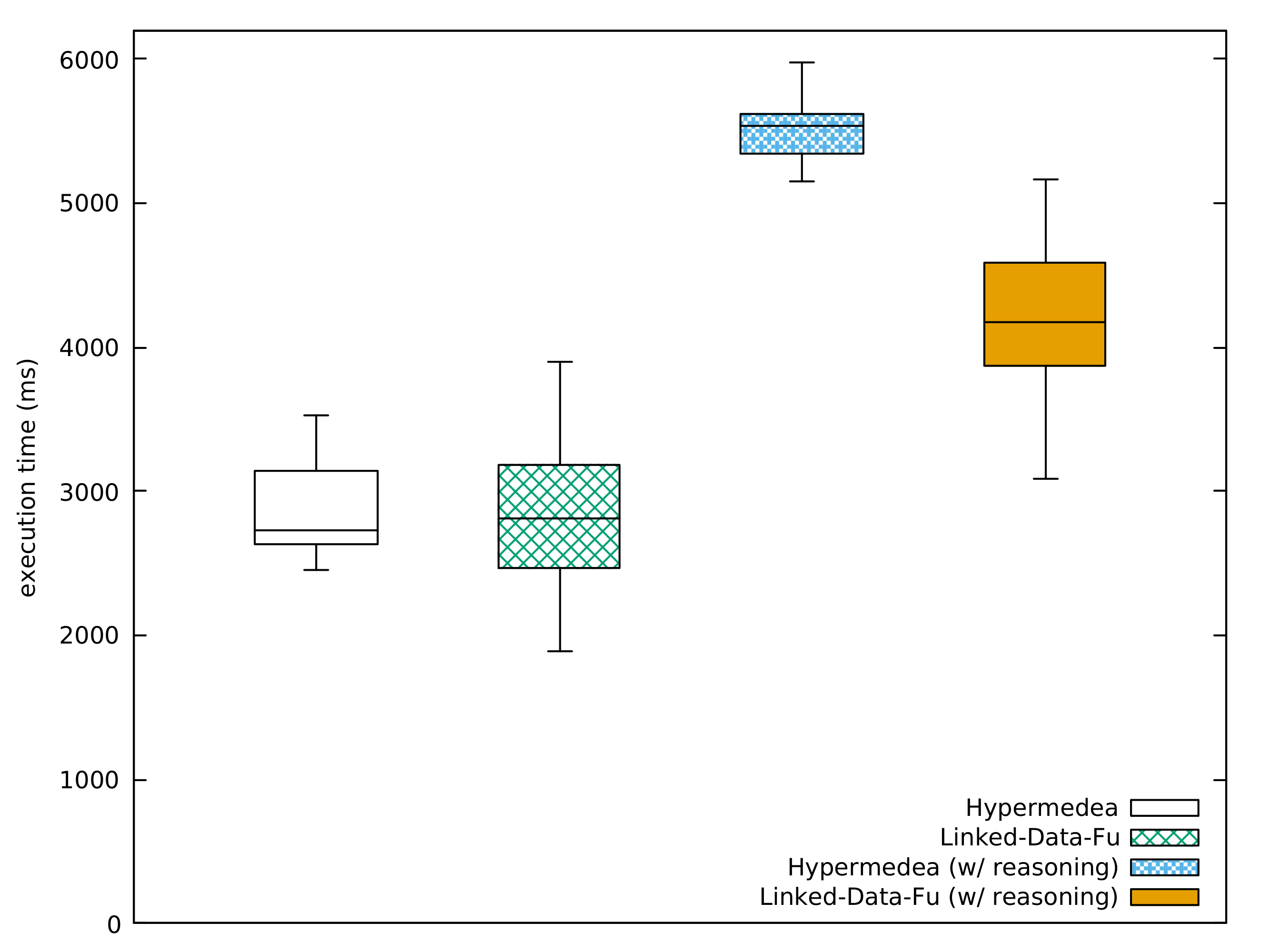

Jason is a Turing-complete programming language. Agents may execute any navigation program. Yet, the event-based execution model of Jason has proven efficient compared to dedicated Linked Data crawlers such as Linked-Data-Fu. The above program is as fast as Linked-Data-Fu at crawling the Fayol Knowledge Graph (7,380 RDF triples contained in 290 interlinked resources).

More details on how to use the Linked Data artifact are given in the Javadoc of LinkedDataArtifact.

See also examples/fayol in the Hypermedea distribution.

Automated Reasoning

While crawling the Fayol Knowledge Graph, the ontology artifact performs two tasks.

It turns ternary rdf/3 predicates into unary and binary predicates corresponding to class and property assertions.

It also materializes implicit assertions based on the OWL axioms found in the KG.

The first of the two tasks is purely syntactic sugar: it allows programmers to write plans based on well-known ontologies. For instance, an agent may have to look for specific equipment in the Fayol building. In Jason, derived predicates may be expressed in a Prolog-like syntax. Writing RDF triples may be cumbersome in Prolog. Instead, a programmer can use short names (generated by a pre-defined naming strategy), as in the following example.

    // plain RDF form
    targetEquipment(Machine) :-
        rdf("https://territoire.emse.fr/kg/emse/fayol", "https://w3id.org/bot#containsElement", Machine) &
        rdf(Machine, "http://www.w3.org/1999/02/22-rdf-syntax-ns#type", "http://www.productontology.org/id/Filler_(packaging)") .

    // equivalent short form based on OntologyArtifact properties
    targetEquipment(Machine) :-
        containsElement(fayol, Machine) &
        'Filler_(packaging)'(Machine) .

Here, the agent's target equipment is a filling machine.

There is indeed a filling machine in the Fayol building (on the ground floor, room 012).

Yet, for targetEquipment to be derived, some reasoning is required.

The ontology artifact must first discover that the Fayol building has a ground floor, which itself contains elements, among them a filling machine.

From these three assertions and axioms on OWL properties bot:containsZone and bot:containsElement, a reasoner can infer that the Fayol building indeed contains the filling machine.
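This inference can be mimicked with two rules applied until a fixed point is reached. The Python sketch below is a simplification, not the procedure of an OWL reasoner: it assumes that the relevant axioms amount to transitivity of containsZone and to a property chain from containsZone and containsElement to containsElement. The short identifiers and the intermediate `room012` assertion are hypothetical.

```python
# Hypothetical identifiers; the real Knowledge Graph uses full URIs.
ASSERTED = {
    ("fayol", "containsZone", "groundFloor"),
    ("groundFloor", "containsZone", "room012"),
    ("room012", "containsElement", "dx10"),
}

def materialize(facts):
    """Apply both rules repeatedly until no new fact appears."""
    facts = set(facts)
    while True:
        new = set()
        for (a, p, b) in facts:
            if p != "containsZone":
                continue
            for (c, q, d) in facts:
                if b != c:
                    continue
                if q == "containsZone":
                    # rule 1: containsZone is transitive
                    new.add((a, "containsZone", d))
                elif q == "containsElement":
                    # rule 2: containsZone followed by containsElement
                    new.add((a, "containsElement", d))
        if new <= facts:
            return facts  # fixed point reached
        facts |= new

INFERRED = materialize(ASSERTED)
```

After materialization, `INFERRED` contains the fact that `fayol` contains `dx10`, which is the assertion needed to derive targetEquipment.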

The ontology artifact's second task is to perform incremental reasoning (as new RDF triples are added to the knowledge base by the Linked Data artifact).

If reasoning is active, the artifact automatically materializes any ABox assertion that the OWL reasoner finds, e.g. containsElement(fayol, dx10).

Hypermedea's default reasoner is HermiT.

When reasoning is active, crawling the Fayol Knowledge Graph takes 5-6s while it takes 2.5-3.5s without reasoning. In that respect, Linked-Data-Fu performs better, averaging 4s with reasoning. However, it is important to note that Linked-Data-Fu supports only rule inference: its OWL reasoning procedure is not complete. In the present example, all necessary axioms to discover the DX10 filling machine can be equally expressed as rules. More advanced reasoning may however be useful in practical applications.

For instance, the agent may need to know what other machines are spatially connected to the filling machine, in order to properly control it. Qualitative spatial reasoning (RCC-8, for instance) can be used to infer such relations. Spatial reasoning can be embedded in OWL DL but the correspondence requires axioms beyond rule inference.

More details on naming strategies and inferred assertions can be found in the Javadoc of the OntologyArtifact.

Action

An agent that targets a specific kind of equipment is called a resource agent. Its main role is to execute specialized plans to control a manufacturing resource. A resource agent generally has several alternative plans to execute the same manufacturing process, e.g. to autonomously recover from machine failures or to handle polymorphic control interfaces. Let us start with the simplest possible agent that is programmed against the DX10 machine only.

The purpose of a filling machine is to fill empty containers with matter (e.g. yoghurt) stored in a tank. Its high-level process is as follows:

- bring an empty container under the tank

- open the tank to fill the container

- move the filled container away from the tank

On the DX10 filling machine, most of the process is executed locally by a Programmable Logic Controller (PLC). The agent may only perform two control actions: it can change the speed of the machine's belt conveyor (to move items along a fixed axis) and it can press an emergency stop that stops PLC execution. The agent may also read several state variables, e.g. the current fill level of the machine's tank.

The DX10 machine's interface is exposed in a standard Thing Description (TD) document. The TD model is a W3C standard for exposing so-called Things on the Web. On the Web of Things, connected devices acting as Web servers are modeled by means of their exposed properties (that clients can read and write), the actions they can perform on the physical world (that clients can invoke) and the events they can detect (that clients can subscribe to). A TD document being an RDF document, its URI can be dereferenced by the Linked Data artifact while crawling. For the DX10 machine, the agent is informed of the following interface:

| Operation type | Name |
|---|---|
| readProperty | tankLevel |
| readProperty | stackLightStatus |
| readProperty, writeProperty | conveyorSpeed |
| invokeAction | pressEmergencyStop |

All possible operations on the machine are called affordances and are described as Web forms (with additional schema information on the expected and returned data). To perform an action, the agent only has to fill in the form with valid input data. If the agent intends to start the conveyor belt, it must submit a single number according to the DX10 machine's TD (corresponding to a speed in m/s).

To keep action as simple as possible, a Thing artifact is created for every TD found in the knowledge base.

This artifact exposes readProperty, writeProperty and invokeAction actions that turn an agent's input into a request to the server and handle the server's response.

The Thing artifact also performs input validation and deals with server authentication, if required.
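The idea of validating input against a data schema and then filling in a form can be sketched as follows. This Python code is an illustration under stated assumptions, not the ThingArtifact implementation: the affordance fragment, its URL and its bounds are made up, and `is_valid` and `write_property_request` are hypothetical helpers. The only TD-specific assumption is that PUT is the default HTTP method for a writeproperty operation when the form does not name one.

```python
import json

# Hypothetical affordance fragment; URL and bounds are made up for illustration.
CONVEYOR_SPEED = {
    "type": "number",
    "minimum": 0,
    "maximum": 2,
    "forms": [{"href": "http://dx10.example.org/properties/conveyorSpeed"}],
}

def is_valid(value, schema):
    """Check a value against the numeric part of a data schema."""
    if schema.get("type") != "number":
        return True  # other types are out of scope for this sketch
    # bool is a subclass of int in Python, so it is excluded explicitly
    if isinstance(value, bool) or not isinstance(value, (int, float)):
        return False
    return schema.get("minimum", value) <= value <= schema.get("maximum", value)

def write_property_request(affordance, value):
    """Turn a validated input into a description of the HTTP request."""
    if not is_valid(value, affordance):
        raise ValueError(f"invalid input: {value!r}")
    form = affordance["forms"][0]
    return {
        "method": form.get("htv:methodName", "PUT"),
        "url": form["href"],
        "body": json.dumps(value),
    }
```

Rejecting invalid input before any request is sent keeps malformed commands away from the machine.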

The following snippet shows how the agent can start the DX10 machine's conveyor belt.

    +!start : targetEquipment(Machine) <-
        // an instance of ThingArtifact is automatically created from the crawled TD document
        writeProperty("conveyorSpeed", 1)[artifact_name(Machine)] .

The term artifact_name(Machine) matches an annotation that is automatically added when the artifact is created.

It is useful to resolve conflicts in case two discovered Things expose the same affordances (e.g. two different filling machines in the same manufacturing line).

See the Javadoc of the ThingArtifact and examples/itm-factory in the Hypermedea distribution.

Automated Planning

In the ideal case, all filling machines expose the same affordances, such that the agent knows a single plan to control them all.

In the above example, the agent must know from out-of-band communication that the DX10 machine has a writable property affordance called conveyorSpeed.

It is in fact the purpose of TD Templates to enforce a particular interface for all devices of a certain kind.

In reality, though, industrial equipment is rarely standard. There are even good reasons to maintain a certain heterogeneity in cyber-physical interfaces. On the one hand, machines do not necessarily execute the same physical process to achieve a goal: the DX10 machine is a flow filler but there exist at least six other forms of filling machines (according to Wikipedia). On the other hand, the maximum level of control of that process is obtained if low-level control variables are exposed via a TD.

The resource agent may therefore have to recompose operations on low-level control variables into higher-level actions that are common to all machines targeted by the agent. That is why Hypermedea also includes an automated planning artifact.

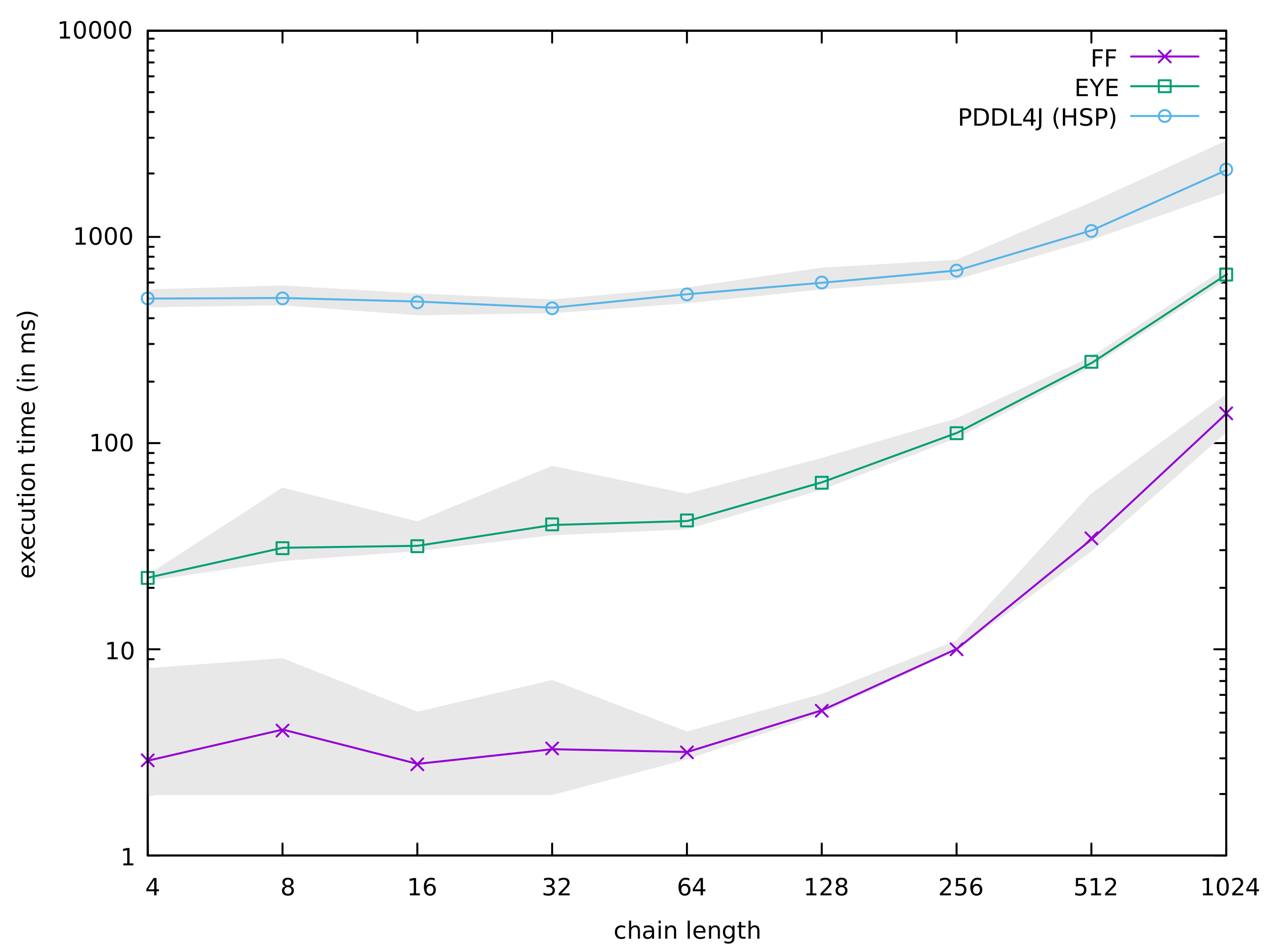

Automated planning could be performed by an OWL reasoner. OWL DL can indeed embed a modal representation of actions, given a modality of necessity. However, planning is known to be extremely costly in terms of computer resources. Instead, the planning artifact hosts a dedicated planner that accepts input in the Planning Domain Definition Language (PDDL). A comparison on a basic Web service composition benchmark shows that a well-known PDDL planner (FF) is a hundred times faster than a general-purpose reasoner (EYE) for finding a plan. The objective of the benchmark is to find a chain of requests of increasing length from an initial state to a given goal state. (Note: EYE is used here instead of OWL reasoners because prior work on Web of Things systems has used it for planning.)
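The benchmark task, finding a chain of requests from an initial state to a goal state, is an instance of state-space search. The Python sketch below uses plain breadth-first search over STRIPS-like actions; it is neither the FF planner nor the PlannerArtifact, and the action names (`r1`, `r2`, `r3`, `detour`) and state symbols are hypothetical.

```python
from collections import deque

# Hypothetical requests: name -> (preconditions, add effects, delete effects)
ACTIONS = {
    "r1": ({"s0"}, {"s1"}, {"s0"}),
    "r2": ({"s1"}, {"s2"}, {"s1"}),
    "r3": ({"s2"}, {"s3"}, {"s2"}),
    "detour": ({"s1"}, {"s0"}, {"s1"}),
}

def find_plan(initial, goal):
    """Return a shortest sequence of action names reaching the goal, or None."""
    start = frozenset(initial)
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        state, plan = queue.popleft()
        if goal <= state:
            return plan  # every goal symbol holds in this state
        for name, (pre, add, delete) in ACTIONS.items():
            if pre <= state:  # the action is applicable
                successor = frozenset((state - delete) | add)
                if successor not in seen:
                    seen.add(successor)
                    queue.append((successor, plan + [name]))
    return None
```

Dedicated planners rely on heuristics rather than exhaustive search, which is one reason why a planner can outperform a general-purpose reasoner on longer chains.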

Hypermedea's planner artifact has a single operation to synthesize plans (buildPlan/2), as shown in the following example.

    domain(domain("dx10", [
        action("writeProperty", ["conveyorSpeed", "?value"],
            hasSubSystem(dx10, "?conveyor"),
            hasValue(dx10, "?value"))
    ])) .

    problem(problem("start", "dx10", [
        hasSubSystem(dx10, conveyor)
    ], hasValue(conveyor, 1))) .

    +run : domain(Domain) & problem(Problem) <-
        buildPlan(Domain, Problem) .

    // plan synthesized by PlannerArtifact
    +plan("start", Plan) <-
        .add_plan(Plan) .

The planner artifact exposes synthesized plans using the plan/2 predicate, associating a goal with a sequential plan.

It is then up to the agent to apply an execution model to the plan.

The Jason language supports meta-programming, such that the agent's plan library can be modified at runtime, as done above with .add_plan/1.

In the following example, meta-programming is used in a slightly more elaborate fashion to take into account the fact that actions may be durative.

    +plan(sequence(_, Seq)) : counter(I) <-
        !executePlan(Seq, 0) .

    +!executePlan(Seq, I) : .nth(I, Seq, Action) <-
        Action ;
        !executePlan(Seq, I+1) .

Planning also brings more flexibility against previously unseen failures. The agent may have a generic recovery strategy that consists in synthesizing a plan before attempting to execute it.

See the Javadoc of PlannerArtifact and examples/planner in the Hypermedea distribution.

The performance evaluation presented here has been published in a WWW '22 demo paper. The industrial scenario developed in this tutorial is being used in the ai4industry series of summer schools.